I've noticed a trend within the rationalist movement of favoring long and convoluted articles that reference other long and convoluted articles--the more inaccessible to the general public, the better.

I don't want to contend that there's anything inherently wrong with such articles; I contend the converse: there's nothing inherently wrong with short and direct articles.

One example of significant simplicity is Einstein's famous E=mc² paper ("Does the Inertia of a Body Depend Upon Its Energy Content?"), which is merely three pages long.

Can anyone contend that Einstein's paper is either not significant or not straightforward?

It is also generally understood among writers that it's difficult to explain complex concepts in a simple way. And programmers do favor simpler code, and often transform complex code into simpler versions that achieve the same functionality in a process called code refactoring. Guess what... refactoring takes substantial effort.

The art of compressing complex ideas into succinct phrases is valued by the general population; quotes and memes are proof of that.

“One should use common words to say uncommon things” ― Arthur Schopenhauer

There is power in simplicity.

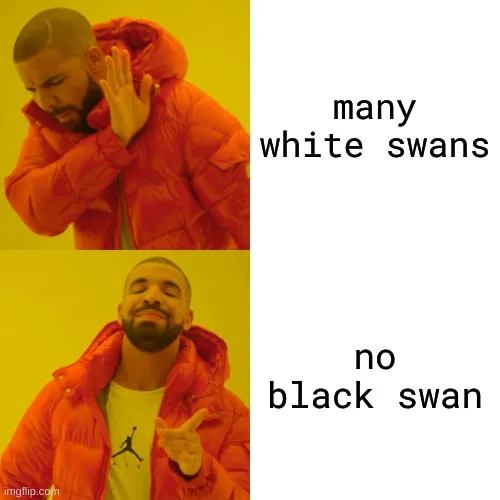

One example of simple ideas with extreme potential is Karl Popper's notion of falsifiability: don't try to prove your beliefs, try to disprove them. That simple principle solves important problems in epistemology, such as the problem of induction and the problem of demarcation. And you don't need to understand all the philosophy behind this notion, only that many white swans don't prove the proposition that all swans are white, but a single black swan does disprove it. So it's more profitable to look for black swans.
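The swan asymmetry can be sketched in a few lines of Python. This is only an illustration: the function names and the list of observations are made up for the example, and a search over a finite list is a loose stand-in for empirical testing, not a formalization of Popper's argument.

```python
def all_swans_are_white(color):
    # The universal claim under test, applied to one observation.
    return color == "white"

def find_counterexample(claim, observations):
    # Return the first observation that violates the claim, or None.
    # None means "not yet falsified", never "proven".
    for obs in observations:
        if not claim(obs):
            return obs
    return None

# Hypothetical observations: many confirmations, one black swan.
observed = ["white"] * 1000 + ["black"]
black_swan = find_counterexample(all_swans_are_white, observed)
```

A thousand white swans leave the claim merely unrefuted, while the single `"black"` entry is enough for `find_counterexample` to return a refutation.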

And we can use simple concepts to defend the power of simplicity.

We can use falsifiability to explain that many simple ideas being inconsequential doesn't prove the claim that all simple ideas are inconsequential, but a single consequential idea that is simple does disprove it.

Therefore I've proved that simple notions can be important.

Because it's too simple. But if you try to do it in say two paragraphs you might be able to extract the gist of it.

I'm pretty sure I can come up with better versions of at least some of Scott Alexander's writings that are in fact simpler. I wouldn't be making the same points as him though.

People have too much ego though and think that their ideas cannot be explained better by other people, or even find it offensive for example if I claim I can explain something better than Scott Alexander. Why?

In open source projects programmers have to get rid of that ego, and other people constantly suggest ways to simplify the code, sometimes rewrite it completely, and guess what the original author says... Thanks. I've made better versions of code written by some bigwig programmers and nobody finds it impossible or offensive. We all think differently and some people think of things we just don't. Why would that hurt anybody's ego?

Which is exactly what I said.

Then why didn't you do it! This is on a post where you are arguing about the benefits of simplicity and you can't be bothered to follow your own advice?

If you aren't making the same points as him, how is it a better version? It is a different piece of writing. That is like saying "I could write a simpler word processing program than Microsoft Word" and then you show us Notepad. Of course it is simpler, but it is not the same thing.

Because sometimes you are removing features from a complex program, and claiming that you have made it simpler. Other people get annoyed because you have removed features.

I have about a decade of coding experience. This is not a new concept to me. But sometimes a junior programmer goes in and removes a piece of code that is critical to the functioning of the program, because they didn't understand why it was necessary. I have done this, and people under me have done this. Sometimes during code reviews I even thought "oh hey that looks much simpler than what I wrote, good on them", only to come back and redo my complex set of code a week later when I realized what bug they had caused.

In programming there is often a logical set of reasons why a particular piece of code exists. In writing that connection is a little more tenuous. Or if you want to maintain the coding metaphor think of a piece of writing as a bit like a piece of code, but it is a set of instructions for the human brain rather than a computer. Human brains vary quite a bit, and they are also a bit more emotional than most machines. So two obvious ways that writing would differ from code:

There would be parts of the writing that are necessary for some brains, and unnecessary for other brains. If a piece of writing is 'unnecessary' for your particular brain, it doesn't mean it is unnecessary for all brains. You could remove it, but you don't know who you have lost by doing so. It's possible you lost no one; it's also possible you lost everyone else.

Emotions in the brain can change how people interpret things. Sometimes you need to set an emotional ambiance in writing for it to be interpreted correctly. To compare to programming, you need to import some packages first. But the brain is a run-time language, not compile time. So it's just gonna take whatever wacky shit you give it and run with that. This changes writing by requiring introductory sections that set the mood. Scott does this in his writing, where he has an introductory section on controversial topics. Those intro sections are often meant to pull your head into the clouds, think big picture, and hopefully calm you down a little if you were coming in angry.

Because I cannot think what I do not think.

I did it as simple as I could. That doesn't mean other people cannot take my output and make it even simpler.

I do not have the mind of other people. Only my mind.

Because I would be making a more general point, that includes his point.

You don't understand what refactoring is. The code has exactly the same functionality. That means no features are removed.

It does exactly the same thing, just with simpler code.

I'm not a junior programmer. I can spot when a piece of code is truly not doing anything.

Here's an example where I found a line of code that wasn't doing anything at all in Linux: lib/kstrtox.c: remove redundant cleanup. For some reason the best programmers in the world didn't see this in the core of Linux, but they agreed my assessment was correct.

I can show you much more complex examples where I reorganized the code and got rid of 50% of it, and it still does exactly the same thing.

Why didn't they spot these issues? Because they don't have my mind.

Source code is for humans to read. Machine code is for computers. When I refactor code it's for other humans to be able to make sense of it in an easier way. When the source code is simpler, humans have an easier time understanding it and spotting problems.

I'm aware of how refactoring is supposed to work. Not everything goes perfectly with human endeavors.

I'm pointing out common failure modes, and your response is that they should just not fail.

And that's how it works with people who do know how to refactor (e.g. me).

It's precisely the opposite: I'm saying it can work, especially when done by people who know how to do it. Your responses imply that it's impossible to do it.

I said that people somehow find it offensive if somebody claims they can write a simpler version of something their idol Scott Alexander wrote and not lose anything in the process (like proper refactoring), and you seem to be offended by that notion. I did not even make the claim, I merely pointed out what would happen if I were to make the claim.

Is such a notion so offensive that it must necessarily fail?

There is a failure mode that happens for code refactoring. I hope you agree that some people out there, maybe not you, can fail at things like refactoring.

Refactoring is often easy for code related things, because in the end there is a simple test for whether a refactoring has gone well: just run the code again and see if the results are the same.
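A minimal sketch of that kind of check, in Python. Everything here is hypothetical: `total_price_original`, `total_price_refactored`, and the test cases are invented for illustration and come from no real project. It also only shows agreement on the inputs that were tried, which is weaker than proving equivalence.

```python
def total_price_original(items):
    # Verbose version: explicit loop with a redundant else branch.
    total = 0
    for item in items:
        if item["qty"] > 0:
            total = total + item["price"] * item["qty"]
        else:
            total = total + 0
    return total

def total_price_refactored(items):
    # Simpler version that is intended to behave identically.
    return sum(i["price"] * i["qty"] for i in items if i["qty"] > 0)

def same_behavior(f, g, cases):
    # "Run the code again and see if the results are the same."
    return all(f(case) == g(case) for case in cases)

# Hypothetical inputs, including the empty, zero and negative edge cases.
CASES = [
    [],
    [{"price": 5, "qty": 2}],
    [{"price": 5, "qty": 0}, {"price": 3, "qty": 4}],
    [{"price": 2, "qty": -1}],
]

REFACTOR_LOOKS_SAFE = same_behavior(
    total_price_original, total_price_refactored, CASES
)
```

The check is only as good as `CASES`: an input nobody thought to include can still behave differently after the refactoring.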

Imagine if you were coding, but everyone had a different OS, a different browser, and different versions of the code libraries you were using. You can be certain that your code refactoring is safe for your machine at this given moment. But you don't know with certainty that it is also going to run on everyone else's machine.

My point has always been that writing is not so straightforward, and it is closer to the hellish existence where everyone has their own unique OS, browser, and code libraries.

The whole point of refactoring code is that it is doing the same thing in the end. My whole point about writing is that we rarely understand what the hell it is doing in the first place, much less what happens when we change it. Why did reading Scott's stuff convince me so easily, but when I shared it with friends it didn't change their minds at all? Why do you find Scott's writings too long, while I find the length just fine? It is because our minds are different.

I am not trying to argue the impossible case that no writing can ever be simplified. I would just say that the simplification is a lossy and imperfect form of data compression. And that what is being lost is probably not apparent to you, because you are probably stripping the things that your mind didn't need in the first place, but that others might have needed. If I or others seem "offended" that you claim to be able to write lossless compression of data, then think of it as the same "offense" that physicists feel towards people that claim to have invented perpetual motion machines.
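To make the lossless versus lossy distinction concrete, here is a small Python sketch using the standard `zlib` module. The sample text and the `summarize` helper are assumptions invented for the example; keeping only the first sentence is a deliberately crude stand-in for simplifying a piece of writing, not a claim about how anyone actually edits.

```python
import zlib

# Hypothetical sample text, repeated so there is something to compress.
text = ("Many white swans do not prove that all swans are white, "
        "but a single black swan disproves it. ") * 3
data = text.encode("utf-8")

# Lossless: decompressing the compressed bytes returns the exact original.
packed = zlib.compress(data)
restored = zlib.decompress(packed)
LOSSLESS_ROUND_TRIP = restored == data

def summarize(s):
    # Lossy: keep only the first sentence. There is no inverse function
    # that rebuilds the full text from this output alone.
    return s.split(". ")[0] + "."

summary = summarize(text)
```

`restored` matches the original byte for byte, while `summary` is shorter but cannot be expanded back into `text` without extra information. In this toy case what was dropped is pure repetition; the concern above is that with real writing you cannot always tell what the dropped part was doing.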

Yes I do, because I follow good programming practices.

I can give you examples where I refactored code and added unit tests to make sure that any and all changes I made retained exactly the same functionality the original code had. If it worked on someone's machine before, it should work on that machine afterwards.

This is not theoretical. I've done these refactorings, and the result works on millions of machines just fine. I can show you the commits.

Only if you don't follow good programming practices.

If you follow simple logically-independent steps, the process cannot fail.

But you can make a guess, and that guess can be right. That's what writing is.

No, not necessarily. Maybe 99.9% of the writers would lose something important in the simplification most of the time, but not all.

So you accept you consider it impossible.

You are not getting me with the whole programming metaphor. If you'd stop thinking that I was questioning your chops as a programmer for two seconds you'd maybe understand.

You have not committed a single piece of code in the theoretical world I made up where everyone has a unique OS. You have committed code in our world that just has basically three OS's you need to worry about.

In both worlds it's not just about you following good coding practices or good writing practices. It's about the people writing the OSes also following good practices. In the theoretical world where there are a billion different OSes and they are nearly all written by amateurs, it doesn't matter how careful you are with your code, because it's gonna be run on top of someone else's shitty code.

Does your program still work if the CPU doesn't know how to add 1+1? Or if the library running your code just randomly decides it's gonna do garbage collection in its own special snowflake way and deletes a bunch of variables you need?

Your current programming ability relies on the fact that the computers it runs on are relatively stable and consistent.

Humans are not stable and consistent. Thus writing for them is not going to be the same as writing for a computer.

I shouldn't have written this last message. I'm done with this conversation. If you were correct about simple writing being effective then one of us should have convinced the other person in the first one or two exchanges.

Wrong. The code I've committed works on OS's you've never heard of.

You keep making assumptions regardless of how many times I've told you the reality.

That world is irrelevant.

No, it doesn't.

But they are real, not hypothetical. The idea-space of human readers is finite.

Only if the convincee was amenable to actually being convinced, and you already conceded you consider the proposition impossible, so there's no way anyone could have convinced you otherwise.
